LLaMA 2 is the second generation of a fast and powerful artificial intelligence (AI) model that Meta initially designed for research. Meta officially released LLaMA 2 in 2023, an open ... Llama 2 Community License Agreement: "Agreement" means the terms and conditions for use, reproduction, distribution and ... Llama 2 is also available under a permissive commercial license, whereas Llama 1 was limited to non-commercial use. Llama 2 is capable of processing longer prompts than Llama 1 and is ... The greatest thing since sliced bread dropped last week in the form of Llama-2. Meta released it with an open license for both research and commercial purposes. Llama 2's license, again, not only permits commercial use: the model and its weights are available to virtually anyone who agrees to the license and commits to using Llama 2 ...

Customize Llama's personality by clicking the settings button. I can explain concepts, write poems and code, solve logic puzzles, or even name your pets. Send me a message or upload an ... Llama 2 outperforms other open source language models on many external benchmarks, including reasoning, coding, proficiency, and knowledge tests. Llama 2: The next generation of our open ... Experience the power of Llama 2, the second-generation Large Language Model by Meta. Choose from three model sizes, pre-trained on 2 trillion tokens and fine-tuned with over a million human ... We have collaborated with Vertex AI from Google Cloud to fully integrate Llama 2, offering pre-trained, chat, and CodeLlama models in various sizes. Getting started from here, note that you may need to ...

Description: This repo contains GGUF format model files for Meta Llama 2's Llama 2 70B Chat. About GGUF: GGUF is a new format introduced by the llama.cpp team on August 21st, 2023. AWQ models for GPU inference; GPTQ models for GPU inference, with multiple quantisation parameter options; 2, 3, 4, 5, 6 and 8-bit GGUF models for CPU+GPU inference. Aug 5, 2023: On Medium, I mainly discussed QLoRA to run large language models (LLMs) on consumer hardware. I was testing llama-2 70b q3_K_S at 32k context with the following arguments: `-c 32384 --rope-freq-base 80000 --rope-freq-scale 0.5`. These seem to be settings for 16k ...
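To put the 2- to 8-bit quantisation options mentioned above in perspective, here is a minimal back-of-the-envelope sketch of how much space the weights of a 70B-parameter model would need at each bit width. The helper name `approx_weight_gb` and the constant `PARAMS_70B` are illustrative, not part of any library, and the calculation assumes every weight is stored at exactly the nominal bit width, which real quantised files do not do.

```python
def approx_weight_gb(n_params: float, bits_per_weight: float) -> float:
    """Rough size of the weights alone, in gigabytes (10**9 bytes).

    Assumption: every parameter uses exactly `bits_per_weight` bits.
    Metadata, per-block scales, and mixed-precision layers are ignored.
    """
    return n_params * bits_per_weight / 8 / 1e9


# Nominal parameter count for the 70B model (an approximation).
PARAMS_70B = 70e9

if __name__ == "__main__":
    # Bit widths taken from the GGUF options listed in the text.
    for bits in (2, 3, 4, 5, 6, 8):
        size = approx_weight_gb(PARAMS_70B, bits)
        print(f"{bits}-bit: roughly {size:.1f} GB of weights")
```

Actual file sizes will likely be somewhat larger than these figures, since quantised formats also store scaling information alongside the weights, and the memory needed at inference time grows further with context length.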

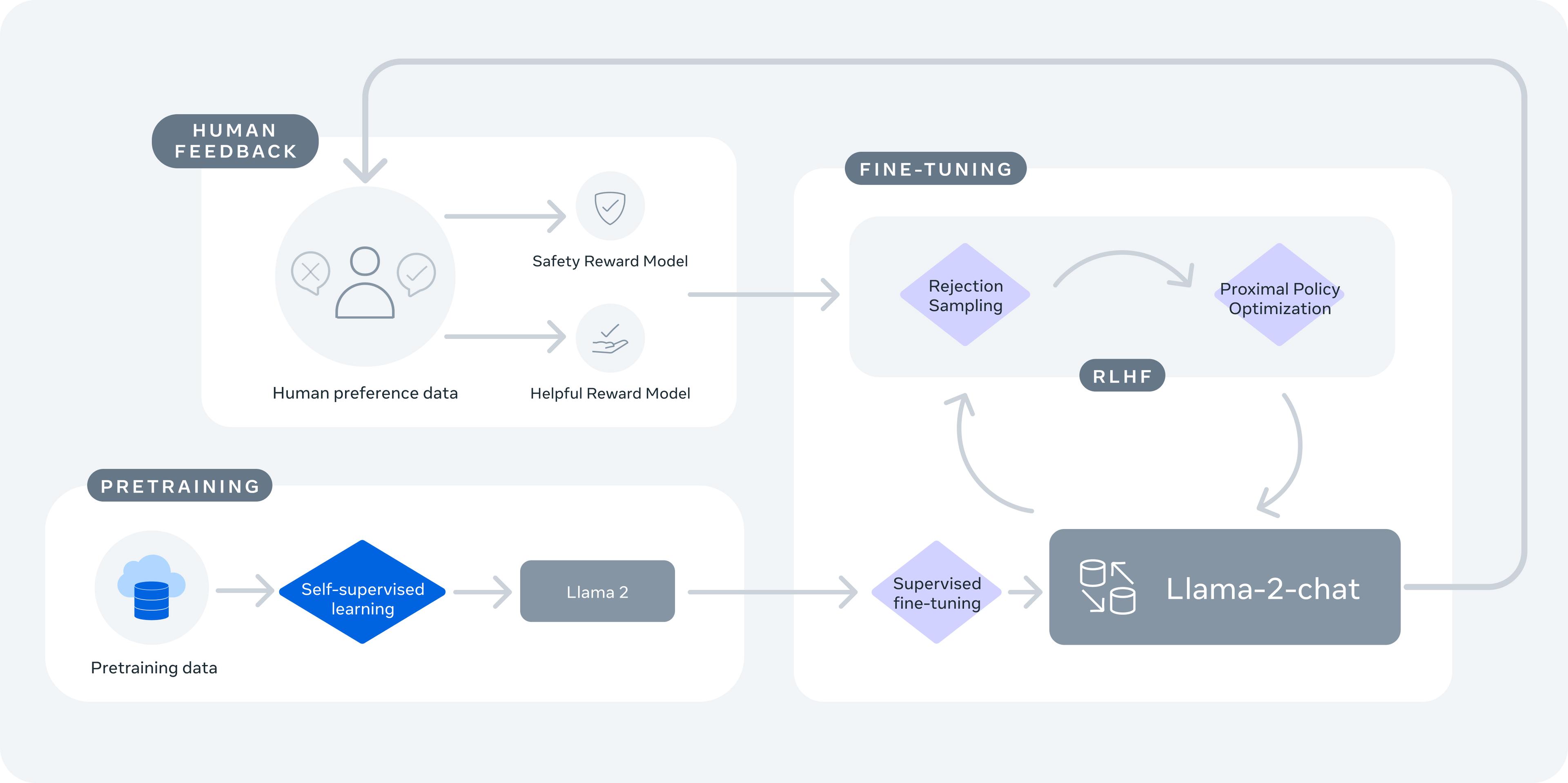

In this work, we develop and release Llama 2, a collection of pretrained and fine-tuned large language models (LLMs) ranging in scale from 7 billion to 70 billion parameters. The LLaMA-2 paper describes the architecture in good detail to help data scientists recreate and fine-tune the models, unlike OpenAI papers where you have to deduce it ... Jose Nicholas Francisco, published on 08/23/23, updated on 10/11/23: Llama 1 vs Meta's Genius Breakthrough in AI Architecture, Research Paper Breakdown. First ... Oct 8, 2023: Llama 2 is a collection of pretrained and fine-tuned large language models (LLMs) ranging in scale from 7 billion to 70 billion parameters. In this work, we develop and release Llama 2, a family of pretrained and fine-tuned LLMs, Llama 2 and Llama 2-Chat, at scales up to 70B parameters. On the series of helpfulness and safety ...
